How Nvidia Grows: The Engine for AI and The Catalyst Of The Future

A pure strategic masterclass from Nvidia's trillion-dollar accelerated computing empire

👋 Welcome to How They Grow, my newsletter’s main series. Bringing you in-depth analyses on the growth of world-class companies, including their early strategies, current tactics, and actionable product-building lessons we can learn from them.

Hi, friends 👋

Get comfy, and hold onto your seats.

I won’t mince words here. Nvidia—the most valuable company by market cap that we’ve tackled so far—is not just a behemoth, but one of the most important companies on the planet. Analyzing a company this size means I’m either going to get caught with my pants down trying to tell this story well enough, or, if pulled off, I imagine this will be one of the more successful deep dives I’ve done.

As always, you’ll be the judge of that. ✌️

We all know Nvidia has taken the spotlight in the news recently, and for good reason. On the surface, it’s because their stock has been on an absolute tear.

Just take May 25th, when they added $184 billion to their market cap in a single day. That’s more than the value of SHEIN, Stripe, and Canva combined.

And it’s not just a price run with nothing behind it. In their latest second-quarter earnings report, their revenue was $13.51 billion, up 101% YoY and up 88% from Q1. They also have $16B in cash/liquid assets. That’s wild.
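To put those growth rates in context, here is a quick back-of-the-envelope sketch that backs out the earlier quarters implied by the figures above. The helper name `implied_base` is mine, and the outputs are derived only from the numbers quoted here, not taken from Nvidia's filings.

```python
# Figures quoted in the paragraph above (revenue in USD billions).
q2_revenue = 13.51
yoy_growth = 1.01  # "up 101% YoY"
qoq_growth = 0.88  # "up 88% from Q1"


def implied_base(current: float, growth: float) -> float:
    """Back out the earlier figure from a current value and its growth rate."""
    return current / (1.0 + growth)


year_ago_q2 = implied_base(q2_revenue, yoy_growth)
prior_q1 = implied_base(q2_revenue, qoq_growth)

print(f"Implied Q2 revenue a year earlier: ${year_ago_q2:.2f}B")
print(f"Implied Q1 revenue this year:      ${prior_q1:.2f}B")
```

In other words, going by these numbers, revenue roughly doubled in a year, and most of that jump landed in a single quarter.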

Of course, this growth has been because Nvidia is playing a huge role in this AI revolution. But I don’t need to tell you that with a 30-year-old company valued at $1.2 trillion—there’s more to the story.

So, my curiosity sent me on a 30-hour researching and analyzing spree, going over everything I could get my hands on to bring you my armchair analysis of the world's 6th most valuable company.

I’ll summarize this 7K word deep dive for you in three words: a strategic masterclass.

Jensen Huang—the Taiwanese-born, leather-jacket-rocking, and pimped-up-Toyota-Supra-driving founder and CEO of Nvidia—is a man who’s bet the entire future of Nvidia not once, but three times. And, he may well do it again.

Jensen is a seismic wave-spotting genius, and arguably one of the best strategic executors I’ve come across. He has a resume of making deep and accurate analyses of Nvidia’s competitive and macro environment, developing very clear and well-defined strategic visions, and addressing all potential strategic bottlenecks with policies and coherent actions that guide Nvidia forward.

But, there’s a good chance you’ve never heard his name until now because he doesn’t poke at other CEOs about cage-fighting, and he doesn’t get people all riled up. He thinks, strategizes, and he humbly gets to work building for the future.

I learned so much from studying him and Nvidia. I hope you will too.

Now, just to tease what we’ll be getting much deeper into…

Nvidia’s invention of the Graphics Processing Unit in 1999 pioneered one of the most significant developments in modern technology: GPU-accelerated computing. With it, they also sparked the growth of the PC gaming market, redefined modern computer graphics, and revolutionized parallel computing, allowing computers to do way more.

In the past few years, Nvidia saw their first big growth surge with the crypto wave, where their computational hardware was essential for mining. But, after the hype cycle, revenue fell as crypto crashed and the gaming industry slumped.

Then along came ChatGPT, producing a tidal wave of interest in the AI sector, especially Generative AI. This ignited a race at various layers of the AI stack. While Google, Microsoft, Meta, OpenAI, Anthropic, and other AI players battle it out, Nvidia rakes in the cash because their chips power pretty much all of them. And they’re benefiting from this increased demand more than anybody else because Nvidia’s specialized chips can process large amounts of data more efficiently and cost-effectively than traditional semiconductors. Essentially, they are the ground for AI research.

You might be thinking that Nvidia won the lotto here by having GPU chips so perfectly suited for being the engine behind AI. Except, thanks to Jensen, being in this position was a decade in the making. The company’s chips broke through in the AI community back in 2012 with the AlexNet neural network, and it’s clear Nvidia made a massive bet on AI long before other companies did. As Jensen said in a commencement speech at the National Taiwan University in May, “We risked everything to pursue deep learning.”

So, while Nvidia gets to ride the AI wave, it’s their GPU-powered deep learning that actually ignited modern AI in the first place, with their GPUs acting as the brains of computers, robots, and self-driving cars.

Simply, through a pick-and-shovel play (more on this soon), Nvidia is tightly coupled with most high-growth sectors, like AI, machine learning, VR/AR, big data, cybersecurity, gaming, crypto, and autonomous travel, as they all rely on Nvidia’s chips. Thus, when one of these high-growth sectors is running hot, so too will Nvidia.

In short, Nvidia’s products are at the center of the most consequential mega-trends in technology. And I have no doubt that they will continue to be at the forefront of the next revolution. At the end of this piece, we’ll touch on what that might be for Nvidia. Hint: Neuromorphic Computing. 😵‍💫

On that note, let's get to it.

Here’s what you can expect in today’s analysis:

How Nvidia Started: Denny’s Diner, Stumbled Steps, GPUs, and CUDA

Right direction, wrong product

Spotting an opportunity in gaming, crafting a winning GPU strategy, and building their base layer

Expanding beyond games, and unlocking industries with the CUDA platform

How They Grow: Powering The Next Stage Of The Internet

The Nvidia Data Center: One platform, unlimited acceleration

Chips & Systems: AIaaS— a classic Pick-and-Shovel play

Nvidia Omniverse: The platform for the useful metaverse

Seeding their own multi-market ecosystem: A lesson on expanding your TAM

What could be next for Nvidia? Neuromorphic Computing?

As we go, I’ve peppered in sections with actionable insights, noted with ⚒️🧠

Small ask: If you enjoy today’s post and learn something new, I’d be incredibly grateful if you helped others discover my writing. Hitting ❤️ in the header, or sharing/restacking, are all a huge help. Thank you!

How Nvidia Started: Denny’s Diner, Stumbled Steps, GPUs, and CUDA

The story of Nvidia is one that shows how engineering breakthroughs really do push our world forward.

There are a lot of places I could start telling it, but I think the most logical place is in a dingy diner covered in bullet holes, in the then-sketchy neighborhood of East San Jose, in April 1993. Three entrepreneurial electrical engineers—Jensen Huang, Chris Malachowsky, and Curtis Priem—sat drinking bottomless burnt coffee, deep in conversation, anticipating that the next wave of computing would be graphics-based.

Nobody would have thought their conversation about chips was laying the foundation for a company that was about to define computing—and still does.

As Chris recalled:

There was no market in 1993, but we saw a wave coming. There's a California surfing competition that happens in a five-month window every year. When they see some type of wave phenomenon or storm in Japan, they tell all the surfers to show up in California, because there's going to be a wave in two days. That's what it was. We were at the beginning.

The wave they saw coming was the nascent market for accessible GPUs, and how there was an opportunity to create plug-in chips for PCs that could provide realistic 3D graphics for games and films.

So, buzzed on coffee and their strong conviction that the next big opportunity in technology was in accelerated computing— a parallel processing approach that frees the computer’s Central Processing Unit (CPU) and gives the heavy lifting of data processing to other processors, like the GPU—they left Denny’s Diner to go and found Nvidia.

Things were in motion. But it took a few years for them to find their feet.

Right direction, wrong product

Even though Jensen implemented a highly successful strategy from 1998 to 2008—setting their trajectory to own the global chip market— Nvidia stumbled in their first steps.

The first product they built was a multimedia card called NV1, which was introduced to the market in 1995.

The founders leaned on the expected multimedia revolution at the time and tried to ride the wave and establish an industry standard. However, the NV1 was a bit of a flop. It wasn’t considered better than competing graphics cards of the day (from the likes of ATI and S3), and it was hard to program. As a result, the games that were created for the NV1 weren’t great and had performance issues.

They got to work on a second version, the NV2, but ended up canning it before it went live.

Why?

Because they were not feeling a pull from the market, and while NV2 was in development, Jensen was running a strategic analysis that took into account the team’s, and company’s, existing strategic advantages, as well as crucial market observations from media industry experts like Ed Catmull, co-founder of Pixar.

He realized their current path was not the way, and he made the first big bet on Nvidia’s future.

So, four years in and with an actual product in the market paying the bills, he made a major strategic pivot and focused on the development of a product that he believed would be better suited for a new, exponentially growing, market.

Spotting an opportunity in gaming, crafting a winning GPU strategy, and building their base layer

Jensen and the team noticed an attractive opportunity in the field of 3D graphics, a sub-niche of their initial multimedia focus.

A good lesson on the exercise of niching down to find your sweet spot in the market. ⚒️🧠

They decided this was the market they needed to focus on, the real wave, and set out to design a Graphics Processing Unit (GPU) that would render 3D graphics. They dropped the approach to graphics they had created with the NV1 and adopted the then fast-rising approach (and today’s standard) in 3D graphics processing: the graphics pipeline. This approach was in fact developed by a competitor, SGI.

So, why did the team decide to compete in this fast-paced and highly competitive arena of computational power? Why did they think this move would be successful? Two reasons: 👇

1. The market’s demand for GPUs was expected to be “unlimited”

Unlimited in the sense that it would surpass supply by a significant margin and wouldn’t slow down anytime soon.

This meant there was a massive TAM and a big enough slice for Nvidia to take.

The reason was the emerging video game industry, where (1) games had the most computationally challenging problems, (2) computational processing power was a competitive advantage for online video gamers, and (3) they believed video games would have incredibly high sales volume.

So, the better your GPU as a gamer, the better your performance, and the more bragging rights you’d have about your gaming skills.

This presented gaming as a strong wedge into the 3D graphics computational arena, which, as we’ll see, became the company's core flywheel to reach other adjacent markets and fund huge R&D to solve massive computational problems.

2. They took a radically different position in the computational market

Remember, accelerated computing is all about improving a computer's processing power. The better it can process, the more advanced feats it can pull off. Back in the '90s and early 2000s, the main place processing was taking place was the PC's Central Processing Unit (CPU).

When Nvidia broke into this market, the top dog was Intel.

Intel's incumbent strategy was to minimize the CPU’s costs. AKA, to make the single horse pulling the chariot more efficient and more profitable to sell.

Nvidia’s strategy was to maximize the GPU’s performance. AKA, to add another type of workhorse to share the load. It increased the cost of the machine, but their go-to-market was to cater to the constantly growing need for faster graphics rendering.

To give a bit more context as to why Jensen’s bet was such an innovative and bold one, it’s helpful to understand the status quo at the time in the industry. In short, progress in computational power depended on decreasing the size of transistors and reducing consumed power. So, the first chip player who found a way to design a significantly smaller transistor and a way to manufacture it would gain a huge advantage in the market.

Sounds good, except that the rate of progress seemed to follow an observation by Gordon Moore, Intel’s co-founder, known as Moore’s law. It basically stated that the number of transistors on a chip, and with it the chip's computing power, would double roughly every 2 years.

The whole industry relied on that law, accepting that nobody could really break it. At least not consistently. Which is kind of funny when you think about it: the leader of the incumbent company coining a law that in a way fends off competition. 👀

But, Nvidia’s radically different positioning in the computational arena was based on Jensen’s belief that Nvidia could actually provide an equivalent improvement of computational power in one-third of the industry’s cycle by focusing on increasing the number of transistors in their GPUs (not CPUs), taking full advantage of the graphics pipeline approach.
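To see why that cadence matters so much, here is a rough sketch of the compounding. It assumes, purely for illustration, that every product cycle delivers a doubling in performance, that the industry's cycle is 24 months, and that the faster cycle is exactly one-third of that. The function and constant names are mine.

```python
def relative_performance(months_elapsed: float, months_per_doubling: float) -> float:
    """Performance relative to the starting point, assuming one doubling per cycle."""
    return 2.0 ** (months_elapsed / months_per_doubling)


INDUSTRY_CYCLE_MONTHS = 24                     # assumed: the ~2-year cycle described above
FAST_CYCLE_MONTHS = INDUSTRY_CYCLE_MONTHS / 3  # assumed: one-third of the industry's cycle

for years in (2, 4, 6):
    months = years * 12
    industry = relative_performance(months, INDUSTRY_CYCLE_MONTHS)
    fast = relative_performance(months, FAST_CYCLE_MONTHS)
    print(f"After {years} years: industry {industry:.0f}x, fast cycle {fast:.0f}x")
```

Under these assumptions, the gap is not 3X. After two years it is 2x versus 8x, and it keeps widening with every cycle, which is why a faster iteration loop compounds into such a large lead.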

This approach was revolutionary, and their GPU strategy was so successful at setting up Nvidia’s advantage for 2 big reasons:

It enabled multiple lightning strikes within its category—driving big attention waves. Being able to announce a GPU that was 2 times as fast as the previous one, and 3X faster than competitors, meant they got a ton of large-scale marketing. This built their brand and allowed them to steal market share from Intel.

It enabled them to build a data and experience flywheel much faster. Thanks to their ultra-fast iteration cycles, Nvidia’s team gained experience in how to turn their technological designs into sellable products three times faster than their competitors. And since the available human capital in the industry was limited, experienced engineers were another benefit that amplified Nvidia’s competitive advantage—a cornered resource superpower.

With this, Nvidia created and won the GPU category. They went public in 1999 at 82c a share (today, they’re up 59,000%), and from 2000 to 2008, this strategy quadrupled their revenue, allowing their R&D flywheel to solve more technical challenges in computing.

The first big bet that paid off.

But, unfortunately, the physical limit in the chip world does exist, as you can only make a transistor so small.

So, despite raking in the cash and watching their public company’s stock price climb, Jensen saw the limit approaching. He saw Nvidia losing their coveted advantage. And being a badass who never rests on his laurels, he went back to the strategy board and maneuvered the ship once again. 👇

Expanding beyond games, and unlocking industries with the CUDA platform

From 1999 to 2006, Nvidia’s bread and butter was servicing the gaming market with their newly minted GPU hardware.

And this stage was foundational, not just for the company, but for human progress. Simply, because GPUs set the stage to reshape the computing industry.

But in 2006, they opened Pandora’s box to how GPUs could be used by launching CUDA—a general-purpose programming model that allowed developers to use their Nvidia GPUs for other things.

This was Jensen and Nvidia’s first platform strategy.

To drastically oversimplify it, this made the power of accelerated computing fully accessible, allowing smart people to unlock and harness their GPU’s processing ability beyond games. This breakthrough allowed for multiple computations at a given time instead of sequentially, resulting in much faster processing speeds—a game-changing move in the development of applications for various industries, like aerospace, bio-science research, mechanical and fluid simulations, climate modeling, finance, professional visualization, energy exploration, autonomous driving, and AI, which all require big number crunching to work.
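To make "multiple computations at a given time instead of sequentially" a bit more concrete, here is a minimal sketch of the data-parallel idea. It is plain Python, not CUDA, and the function names are my own. Each call to `saxpy_kernel` plays the role of one GPU thread handling one element, and a thread pool stands in for the GPU. Python threads won't actually make this faster; the point is only the shape of the programming model.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_kernel(i, a, x, y, out):
    """Work for one 'thread': compute a single output element, independent of the rest."""
    out[i] = a * x[i] + y[i]


def run_sequential(a, x, y):
    """CPU-style: one worker walks through the elements one after another."""
    out = [0.0] * len(x)
    for i in range(len(x)):
        saxpy_kernel(i, a, x, y, out)
    return out


def run_parallel(a, x, y, workers=4):
    """GPU-style (illustrative): hand every index to a pool of workers at once."""
    out = [0.0] * len(x)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every task to finish and surfaces any exception.
        list(pool.map(lambda i: saxpy_kernel(i, a, x, y, out), range(len(x))))
    return out


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
print(run_sequential(2.0, x, y))
print(run_parallel(2.0, x, y))
```

Both versions give the same answer. The difference is that the second one never says what order the elements get processed in, which is exactly what lets hardware with thousands of cores chew through them all at once.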

In other words, CUDA was a monumental development in the industry that took Nvidia from a hardware product for gaming to an industry-opening platform.

Multi-market platforms and their network moat

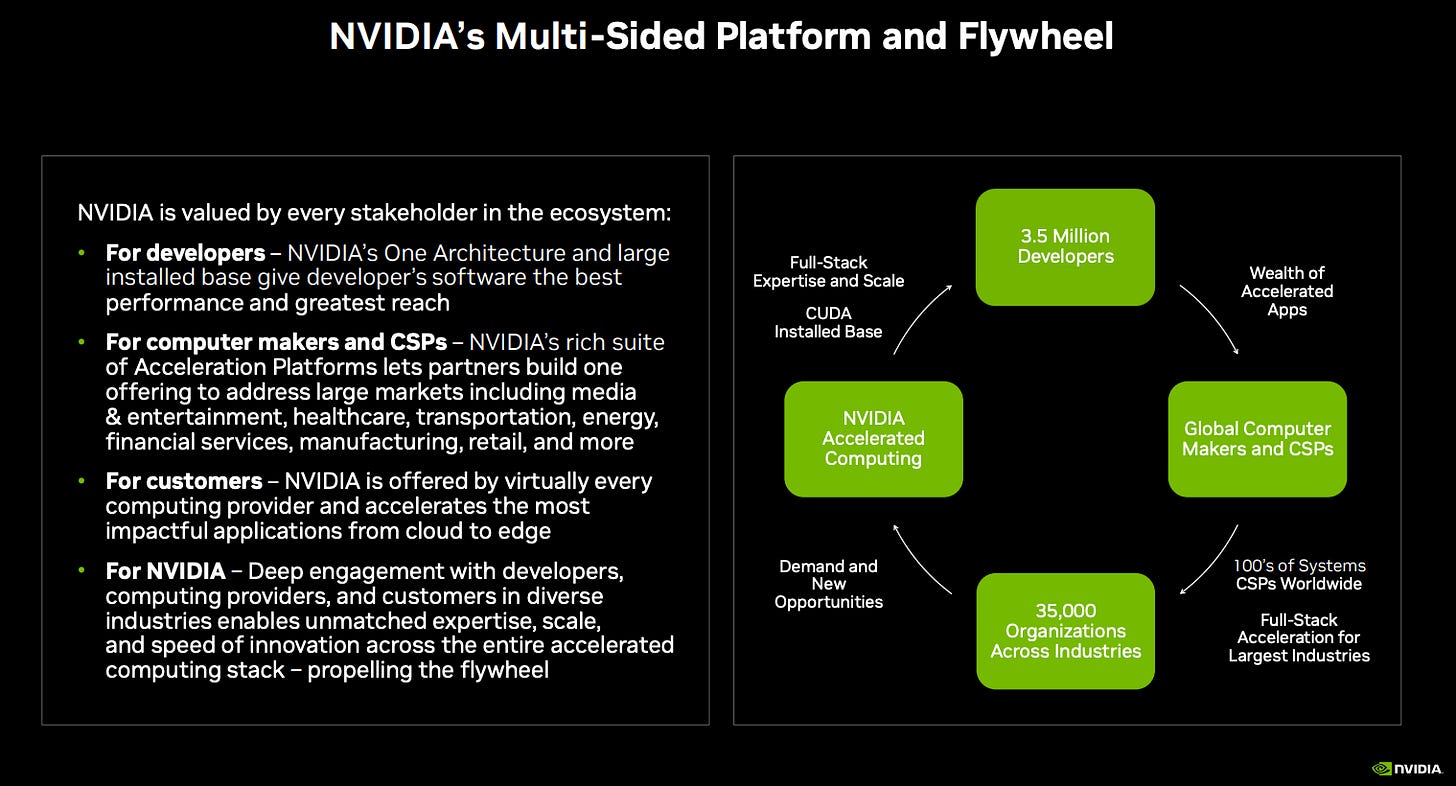

Nvidia’s first platform had three key players:

Software developers: GPUs are specialized hardware and need highly skilled hardware programmers to code. However, CUDA’s brilliance is that it brings the convenience of software development to specialized hardware products. When they launched in ‘06, Nvidia went all in chasing them down and getting them accustomed to the platform—knowing they were essential to the platform flywheel.

Hardware manufacturers: CUDA is all software, but it needs hardware to run with it. Jensen decided to keep this component closed, meaning only Nvidia’s GPUs (which are manufactured in Taiwan) can run CUDA. This turned out to be a fantastic move in (1) creating defensibility, and (2) providing superior performance to users as it allowed Nvidia to rapidly iterate on better designs and bring the best-in-class hardware-software integration to the developers.

Consumers: This includes all the customer profiles who would need fast computing.

This creates a nice multi-sided platform and flywheel, with more demand for chips and systems driving more developers and organizations into the ecosystem, in turn, solidifying Nvidia’s network effect.

That flywheel was, and still is, fueled further by two other elements:

Strong partnerships: Nvidia’s partnerships with other big tech companies (Microsoft, Amazon, IBM, etc.) are another competitive advantage. This was especially true in their GTM phase of CUDA, as it helped them reach new customers and markets. They’ve also partnered very closely with the game developer community, and this tight feedback loop has given them a massive advantage in the gaming industry, as is evident by Nvidia being the preferred choice by a landslide for game developers.

Robust sales and distribution channels: Nvidia sells their chips through a bunch of channels, including direct sales, original equipment manufacturers (OEMs), and e-commerce platforms.

As mentioned, their CUDAxGPU platform was general-purpose, enabling Nvidia to cut across various verticals and tap into the growth of different emerging sectors. In other words, if an industry like crypto took off, they’d be well-positioned to ride that wave. And when looking at a few of the big domains that CUDA specializes in supporting at the moment—gaming, creative design, autonomous vehicles, robotics—it’s clear these all have a long runway for growth for decades to come.

AKA, Nvidia is poised to provide for multiple gold rushes.

A testament to the power of solving hard problems in the background. 🙇‍♂️

What you can do with this ⚒️🧠

When demand is up, build your position in the arena from a different point of view

Study the market to spot emerging opportunities, don’t rest on your laurels, and study your competitors to understand their most important strategic decisions.

Then build a coherent strategy based on the following:

An understanding of where you are

A different point of view about what the big opportunity ahead is

An honest analysis of the challenges/problems that stand in your way

A fair assessment of your internal capabilities, and your advantages

Ask yourself, which industry rule can we challenge to generate a valuable, different position?

Now, if you’ve learned anything about Jensen so far—the man who has no problem betting the farm for a village—you know another big company gamble is coming along. 🎲

Here’s a quote by Jensen. It’s somewhat random to insert here, but I think it paints a great picture of who Nvidia’s captain is.

My will to survive exceeds almost everybody else’s will to kill me

— Jensen

What a legend. 🫡

Now let’s see, pun absolutely intended, how the chips landed with his 2012 bet.

How They Grow: Powering The Next Stage Of The Internet

Over a decade ago, in a more suave setting than a bullet-ridden Denny’s Diner, the Nvidia founders reviewed the landscape yet again and spotted the next big wave: The data center.

Today, it’s Nvidia’s biggest driver of growth, and coupled with their Omniverse and AI platforms, it plays a crucial role in the building of the 3D Internet (what Nvidia calls the metaverse) and AI development.

The Nvidia Data Center: One platform, unlimited acceleration

So far, we’ve covered how there were two programmable (AKA, follows specific instructions) processors:

The CPU: Intel’s main competency, with transistors focused on sequential computing (working through instructions one after another). For years, it was the sole programmable element of a computer.

The GPU: Nvidia’s expertise, with transistors focused on parallel computing. Initially used for rendering 3D graphics, GPUs’ processing efficiencies make them ideal for things like crypto and AI.

The problem—as Jensen saw coming—with both of them is that they’ve reached their physical upper limit of computational capabilities. He also knew that operating at the limit wasn’t going to be good enough.

For instance, back in 2012, Nvidia sparked the era of modern AI by powering the breakthrough AlexNet neural network. They knew AI was coming long before it was hot, and they knew the type of horsepower the engine for it would demand.

So, seeing that (1) the limit was nearing, and (2) there would be demand for computational power far beyond what single PCs or even servers could provide, he leaned into Nvidia’s expertise to invest heavily in a new, third, class of programmable processors:

The DPU (Data Processing Unit). To avoid the technical mumbo-jumbo here, just know that these chips are ultra-efficient at data functions like networking, storage, and security, and are the building blocks of hyper-scale data centers. Paired with the GPUs that do the heavy lifting of AI training, the DPU is at the center court of the computational arena today, and demand for Nvidia's data center chips is insane.

As Elon Musk said of GPUs to the WSJ, they are considerably harder to get right now than drugs.

And I trust him. 😉

Essentially, Nvidia realized the future of accelerated computing was going to be a full-stack challenge, demanding a deep understanding of the problem domain, optimizing across every layer of computing, and all three chips—GPU, CPU, and DPU.

But as we’ve seen multiple times in this newsletter—selling products alone doesn’t get you to the 4-comma club. Although, selling a chip with unparalleled demand at $30K a pop won’t hurt you.

No, only building an integrated platform will get you there.

Which is exactly what they’ve done. In partnership with AWS, they’re building out the world's most scalable, on-demand cloud AI infrastructure platform optimized for training increasingly complex large language models (LLMs) and developing generative AI applications.

But Nvidia’s massive data center strategy isn’t their only platform play besides CUDA. 🤔

Nope. So let’s talk about the other two:

Nvidia’s AI Platform-as-a-Service

Nvidia’s Omniverse Platform

Chips & Systems: AIaaS— a classic Pick-and-Shovel play

The Pick-and-Shovel strategy is a nod to the California gold rush in the 1800s, where there were two types of people: Those who looked for gold, and those who sold the tools (picks and shovels) to mine it.

The latter folks weren't in the business of caring whether anyone struck gold or not, since selling the tools was a sure-fire way of making money without the risk of investing in finding gold, which had huge uncertainties.

In the world of AI, Nvidia is in the same supply chain business. And not just by providing the hardware and data centers (Chips) to support all the AI players, but by selling builders access to their computation services and software to actually build and train their AI models (Systems).

It’s called the Nvidia DGX Cloud, and it’s an AI supercomputer accessible from your browser. 👀

The DGX Cloud service already includes access to Nvidia AI software, AI frameworks, and pre-trained models. Through it, Nvidia is also providing tools for building, refining, and operating custom large language and generative AI models.

At a high level, their full-stack AI ecosystem looks like this:

AI Supercomputer: An all-in-one AI training service that gives enterprises immediate access to their own cloud-based supercomputers

AI Platform Software: The software layer of the Nvidia AI platform, Nvidia AI Enterprise, powers the end-to-end workflow of AI. Simply put, it streamlines the development and deployment of production AI.

AI Models and Services: Nvidia AI Foundations are cloud services within the supercomputer for customizing and operating text, visual media, and biology-based generative AI models. Think of them like Shopify plugins that make the Shopify platform more flexible and useful.

Nvidia’s goal, simply, is to solve as many problems as deeply as possible in the journey of creating AI products. They’re not worried about who the next chatbot winner is, because whoever it is will be built on Nvidia’s engine.

Very clearly, they are at the outset of building an AI-as-a-service (AIaaS) business component, and it is having a fundamentally transformative impact on the business, and more significantly, the entire AI industry.

As The Motley Fool wrote, this early leadership could turn into a lasting competitive advantage, creating a defensible flywheel:

Nvidia's leadership position in advanced processing hardware already looks quite strong and hard to disrupt, but the company may be in the early stages of building another powerful competitive advantage. While algorithms and processing hardware play very important roles in constructing and running AI systems, data can be thought of as the other key component. As AI models are trained with a greater array of relevant valuable data, they tend to become more effective and capable.

By establishing itself as an early leader in AIaaS offerings, Nvidia is positioned to generate huge swaths of valuable data that help to inform and improve its own artificial intelligence capabilities. Through these improvements, the company should be able to deliver better services for its customers.

In turn, this will once again generate more valuable data for the company, setting up a network effect and virtuous cycle that could turn early leadership in AI services into a long-term competitive edge that competitors find very difficult to disrupt.

Looking at Nvidia’s success and rigor in being strategically nimble, I’m betting this is exactly what will happen.

Now, combining their AIaaS with Nvidia Omniverse (below), we see that Nvidia has created a two-piece platform-as-a-service play.

What you can do with this ⚒️🧠

Anticipate industry shifts: Nvidia foresaw the limitations of CPUs and GPUs and invested in the DPU before the demand skyrocketed. Always be forward-thinking and anticipate where your industry is headed.

Diversify your offerings: Nvidia didn't just rely on selling chips; they built an integrated platform. Diversifying your product or service offerings can open up new revenue streams and make your business more resilient.

Leverage partnerships: Nvidia's partnership with AWS allowed them to build a scalable AI infrastructure platform. Find the right industry leaders or complementary businesses to amplify your reach and capabilities.

Platforms, not just products: Platform plays, as we’ve seen time and again, can lead to exponential growth. Platforms create ecosystems where third-party developers or businesses add value, leading to huge network effects.

Solve multiple Jobs-to-be-done: Nvidia's full-stack AI ecosystem is designed to simplify the AI development process for developers. Always think about how you can remove friction and make it easier for your customers to achieve their goals.

Build for the long-term: Nvidia's investments in AIaaS and their platform strategy are long-term plays that could give them a lasting competitive advantage. A great example of how to apply long-term thinking is here: Ants & Aliens: Long-term product vision & strategy

Stay nimble: Despite being massive, Nvidia has shown agility in their strategic decisions and ability to pivot into new areas. Regardless of size, be mindful of the traps that lead companies into the innovator’s dilemma.

Nvidia Omniverse: The platform for the useful metaverse

If Nvidia AI is their answer to every artificial intelligence question, then Omniverse is Nvidia’s answer to every metaverse question—the 3D evolution of the internet.

It’s a platform (also a chips-and-systems play) for virtual world-building and simulations, focused on enterprise customers. It’s based on Universal Scene Description (USD) technology, which, I know, means nothing to either of us. All you need to understand is that it’s a common format for describing and exchanging 3D scenes across different tools. Originally invented by Pixar, USD is well positioned to become the open standard that enables 3D development.

Reading up on their Omniverse platform, it’s clear they have 3 main objectives here:

Make Omniverse the go-to platform for Augmented Reality and Virtual Reality (AR/VR) development

Sign up major partners to use Omniverse

Create a strong and defensible ecosystem around Omniverse

To get there and turn those goals into an actionable strategy, they’re implementing three tactics:

In order to become the prevalent system behind every 3D project, they’re focusing on offering the best simulation tech to the biggest companies and supporting every business that builds applications on top of Omniverse.

In order to make 3D content creation and sharing as easy as possible, they’re focusing on individual and team collaboration tools for real-time interaction with partners and clients, world-building tools, a library of prebuilt objects and environments, compatibility with other sources of graphics and formats, and the leading game engines, Unreal and Unity.

They’re securing partnerships with major cloud service providers (Azure, AWS, Google Cloud) to adopt Nvidia’s tech and architecture, essentially forcing the competition to use their technology, in turn, almost eliminating it.

The customer here, to be clear, is not game developers. Nvidia is not thinking about the metaverse in the gaming or social context. Although, their hardware is certainly involved in powering those. 👏

Rather, they’re betting on the more practical and realistic metaverse by looking at players like:

Artists of 3D content

Developers of AIs trained in virtual worlds

Enterprises that require highly detailed simulations

AKA, think robotics, the auto industry, climate simulations, space industry design and testing, virtual training, etc. In short, their Omniverse X AI platforms are turbocharging science.

One epic example of this is how Nvidia is creating a digital twin of the planet—named Earth-2—to help predict climate change decades in advance. It’s well worth the 1 minute 29s watch of Jensen explaining how their supercomputer is helping us save the world. 🌎

Super cool.

Like I said, Nvidia is at the forefront of some of the most consequential initiatives and is probably the best example we’ve seen of how engineering innovations really do move the world forward.

What you can do with this ⚒️🧠

When your category evolves, double down on your capabilities. Just because your previous strategic advantage can’t be maintained, it doesn’t mean it’s worthless.

The benefit of being a category queen is that:

You identify your category’s evolution early

You get to convert your advantage to serve you in the emerging new category

You have two enemies when your category evolves:

Complacency: falsely believing that you can sustain your current competitive advantage (see The Innovator’s Dilemma)

Overreaching: abandoning your advantage and trying to build new capabilities from zero

Okay, so just to regroup real quick, we’ve seen 3 key platforms (all relying on their hardware) that Nvidia uses to drive their growth.

Their CUDA platform gives them exposure to various general domains (gaming, automotive)

Their Data Centers + AI Platform gives them exposure to the rapidly growing AI sector

Their Omniverse Platform gives them exposure to the professional metaverse

As they called out in a recent investor presentation, that’s a big fucking TAM.

But as we’ve seen already with winning platforms like Stripe (read deep dive), Shopify (read deep dive), and Epic Games (read deep dive), the best of them don’t just capture markets super effectively; they actively work to make their markets bigger.

Shocker, but with a market cap of 7X those three companies combined, Nvidia is no different. 👇

Seeding their own multi-market ecosystem: A lesson on expanding your TAM

Nvidia grows their own market in three powerful ways:

By incubating AI startups

By investing in companies integrated with the Nvidia ecosystem

By offering formalized training and expertise development for their chips and systems

Spearheaded by our main character, Jensen, these initiatives are prime examples of his commitment to fostering innovation, providing scale to their business, and positioning Nvidia as the leader in the AI, data science, and high-performance computing (HPC) arenas.

Let’s run through them real quick.

1. Nvidia’s Inception AI Startup Program

Inception is a free accelerator program designed to help startups build, grow, and scale faster through cutting-edge technology, opportunities to connect with venture capitalists and other builders, and access to the latest technical resources and expertise from Nvidia.

All in, over 15K startups have been incubated in this program. And what’s interesting, given you’ve probably never heard of it, is that those startups have raised funding on par with the market cap of Techstars’ portfolio companies (~$100B).

This is a powerful investment strategy that remains largely under-appreciated, as it drives adoption of their own hardware and software (locking in the next wave of startups to Nvidia), as well as gives Nvidia exposure to startups now more likely to flourish and push the boundaries of AI, data science, and HPC.

And then, as part of Nvidia’s “seed the ecosystem” platform, they recently launched an arm focused on driving bigger investments into these startups.

As you can tell, Jensen loves a platform.

2. Nvidia’s Venture Capital Alliance Program

Here is what Nvidia said about the program when they launched it in 2021:

To better connect venture capitalists with NVIDIA and promising AI startups, we’ve introduced the NVIDIA Inception VC Alliance. This initiative, which VCs can apply to now, aims to fast-track the growth for thousands of AI startups around the globe by serving as a critical nexus between the two communities.

AI adoption is growing across industries and startup funding has, of course, been booming. Investment in AI companies increased 52 percent last year to $52.1 billion, according to PitchBook.

A thriving AI ecosystem depends on both VCs and startups. The alliance aims to help investment firms identify and support leading AI startups early as part of their effort to realize meaningful returns down the line.

The NVIDIA Inception VC Alliance is part of the NVIDIA Inception program, an acceleration platform for over 7,500 startups (now 15,000 in 2023) working in AI, data science and HPC, representing every major industry and located in more than 90 countries.

Among its benefits, the alliance offers VCs exclusive access to high-profile events, visibility into top startups actively raising funds, and access to growth resources for portfolio companies. VC alliance members can further nurture their portfolios by having their startups join NVIDIA Inception, which offers go-to-market support, infrastructure discounts and credits, AI training through NVIDIA’s Deep Learning Institute, and technology assistance.

Again, this furthers the same advantage Nvidia gets from their accelerator—it helps drive the scale of their customers. As they grow, so does Nvidia’s TAM.

3. Nvidia’s Deep Learning Institute

I love it when companies layer in education and training plays. And for a company like Nvidia, which needs more than a thoughtful onboarding experience to get a new customer set up, having meaningful learning material and access to expert resources is crucial.

The creation of their Deep Learning Institute (DLI) has proven to be a super valuable initiative with lots of benefits for Nvidia’s business and the wider community. Most significantly, it’s become a key driver in advancing knowledge and expertise in AI, accelerated computing, data science, graphics, and simulation.

Yet again, this ensures Nvidia’s flag is right there at the forefront of breakthrough thinking and innovation across most high-growth, deep-tech sectors.

Through these three hidden gems, Nvidia demonstrates that they have been brilliant at creating scale for their business through partnering, investing, co-creating, and teaching their customers and partners how to use their technologies to solve real-world problems.

What you can do with this ⚒️🧠

It’s rare to be in a position where you can grow your TAM through investing and incubating startups. Your business needs to be substantially large to do that. But here are a few other takeaways Nvidia brings us that are more realistic for most of us reading:

Think beyond direct sales: Nvidia's approach demonstrates that market leadership isn't just about selling products. It's about creating an ecosystem where your products become indispensable. The sweet spot: you’re able to create an environment where your product is deeply integrated into the fabric of an industry.

Innovate across multiple fronts: Nvidia isn't just innovating in terms of products. They're innovating in terms of how they engage with startups, how they connect with VCs, and how they educate the market. Always be thinking about how you can innovate across multiple fronts to drive growth and market leadership.

Layer in education: Offering educational resources or training can help customers get more value from your products and can position your company as a thought leader. Consider how you can educate your customers, whether it's through online courses, webinars, or other resources.

Create alliances with VCs: If you're in a high-growth industry, consider how you can facilitate connections between investors and startups that use or complement your products.

A rising tide lifts all boats: Nvidia actively works to make their market bigger by supporting their customers' growth. Always be thinking about how you can expand your TAM, whether it's by entering new markets, creating new use cases for your products, bringing non-customers into the market, or driving innovation in your industry that attracts investment.

And to cap us off for today’s analysis. 👇

For the curious…

What could be next for Nvidia? Neuromorphic Computing? 🧠

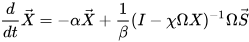

Okay, so to understand neuromorphic computing (NC), all you need to know is this formula:

Juust kidding.

In short, the answer is much simpler: The future of chips is making physical computers think more like human brains, where chips use the same physics of computation as our own nervous system.

The term "neuromorphic" comes from the Greek words "neuron" (meaning nerve cell) and "morphe" (meaning form). In the context of computing, it refers to the use of electronic circuits and devices inspired by biological neurons' structure and function.

While still nascent, this is another massive technological feat. You might be thinking—but we’ve done something similar already with AI neural networks, what’s the difference?

Simply, we’ve made great progress on the software side of things in terms of mimicking the logic of how a human brain thinks. But solving those challenges on a physical chip is a different beast.

That’s what NC is solving though, and you can imagine how much more advanced our AI and computing will be when we have the chips and software both working in unison like a brain. 🧠

And just to illustrate the monumental difference on the chips’ side:

Your computer operates in binary. That’s 0s and 1s; yeses and nos. It’s rigid, so the code we use and the questions we ask these kinds of machines must be structured in a rigid way.

With NC though, we go from rigid to flexible, as these chips will give computers the ability to have a gradient of understanding. I’m no engineer, but from what I’ve read, that’s huge.
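If you want a feel for what "gradient" means here, below is a toy sketch of a leaky integrate-and-fire neuron, a textbook model often used to explain brain-inspired chips. To be clear, this is my own illustration, not how Nvidia's, Intel's, or anyone else's hardware actually works. The function name `simulate_lif` and the `leak` and `threshold` values are made up for the example. The point is that the neuron holds a continuous internal charge that builds up and fades, and it only emits a spike when that charge crosses a threshold.

```python
def simulate_lif(inputs, leak=0.9, threshold=1.0):
    """Toy leaky integrate-and-fire neuron (illustrative only).

    inputs:    list of input currents, one per time step
    leak:      fraction of charge kept between steps (hypothetical value)
    threshold: charge level at which the neuron fires (hypothetical value)
    Returns a list of 0/1 spikes, one per time step.
    """
    potential = 0.0  # continuous internal state, not just a 0 or a 1
    spikes = []
    for current in inputs:
        # Old charge partially leaks away, new input is added on top.
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)   # fire a spike
            potential = 0.0    # reset after firing
        else:
            spikes.append(0)   # stay silent, keep accumulating
    return spikes


# A steady medium input needs a few steps to build up before a spike.
print(simulate_lif([0.5, 0.5, 0.5, 0.5]))  # [0, 0, 1, 0]
```

Notice that a weak input (say 0.05 per step) never fires at all with these settings, because the charge leaks away as fast as it arrives. That "do nothing until something matters" behavior is a big part of why these designs are pitched as energy efficient.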

Here are a few of the breakthrough benefits NC has pundits excited about:

It promises superior processing speed

It has the ability to solve more complex problems (and faster)

It can learn and adapt in real time. Compared to traditional AI algorithms, which require significant amounts of data to be trained on before they can be effective, NC systems can learn and adapt on the fly.

Neuromorphic computing is more energy efficient. Great for lowering the environmental impact, and also enabling AI systems to be deployed in resource-constrained areas.

NC allows us to create AI systems capable of true creativity and artistic expression that can learn and adapt in ways similar to human cognition.

For example, it has the potential to enable hyper-realistic generative AI.

NC provides privacy and security advantages.

NC is poised to be vital for developing AI systems that are more resilient to noise and errors

In more succinct words: Neuromorphic Computing is the key to huge leaps in AI advancements.

Just imagine how fast things would be changing in the AI landscape if these were powering Nvidia’s data centers and AI platforms.

It sounds somewhat far-fetched, but that future is already here and in the works.

As was published in this research paper in Nature:

With the end of Moore’s law approaching and Dennard scaling ending, the computing community is increasingly looking at new technologies to enable continued performance improvements. Neuromorphic computers are one such new computing technology. The term neuromorphic was coined by Carver Mead in the late 1980s, and at that time primarily referred to mixed analogue–digital implementations of brain-inspired computing; however, as the field has continued to evolve and with the advent of large-scale funding opportunities for brain-inspired computing systems such as the DARPA Synapse project and the European Union’s Human Brain Project, the term neuromorphic has come to encompass a wider variety of hardware implementations.

And builders are going after it. Intel is already working on these chips, as are various other startups.

In the long term, NC poses a technological obsolescence risk to traditional GPUs and DPUs. If these types of chips become successful, it could threaten Nvidia's business.

However, because NC has the potential to be a game-changer in many different areas of society, and its consequences could be far-reaching and complex, I have zero doubt in my mind that Jensen and his crew are sitting in a Denny’s somewhere, dice in hand, and mapping out Nvidia’s strategic future over some burnt coffee. ☕

And that, folks, brings us to the end of our Nvidia analysis.

Given how large and complex Nvidia is as a company, ironically, I deliberately kept this shorter than other deep dives so we didn’t risk getting lost in the weeds.

I hope that strategy paid off and that you found this post insightful and enjoyable. 🙏

If you did—and you made it all the way down here to the deep dark footer section—I’d be incredibly grateful if you gave this post a like, share, or just spread the word about HTG to some friends.

As always, thanks for spending time with me here today. I appreciate it.

Until next time.

— Jaryd ✌️

Just saw this post on LI by Dan Hockenmaier that adds more color to Jensen, and some straight up useful takeaways.

[I quote Dan below]

The way that Jensen Huang runs Nvidia makes you question everything we think we know about how a company should operate:

He has 40 direct reports and no 1:1s

- Believes that the flattest org is the most empowering one, and that starts with the top layer

- Does not conduct 1:1s - everything happens in a group setting

- Does not give career advice - "None of my management team is coming to me for career advice - they already made it, they're doing great"

No status reports. Instead he "stochastically samples the system"

- Doesn't use status updates because he believes they are too refined by the time they get to him. They are not ground truth anymore.

- Instead, anyone in the company can email him their "top five things" with whatever is top of mind, and he will read it

- Estimates he reads 100 of these every morning

Everyone has all of the context, all of the time

- No meetings with just VPs or just Directors - anyone can join and contribute

- "If you have a strategic direction, why tell just one person?"

- "If there is something I don't like, I just say it publicly"

- "I do a lot of reasoning out loud"

No formal planning cycles

- No 5 year plan, no 1 year plan

- Always re-evaluating based on changing business and market conditions (helpful when AI is developing at the pace that it is)

This org is optimized for (1) attracting amazing people, (2) keeping the team as small as it can be, and (3) allowing information to travel as quickly as possible.

--end quote

Source: https://www.linkedin.com/posts/dan-hock_the-way-that-jensen-huang-runs-nvidia-makes-activity-7107373520761884672-w-vJ?utm_source=share&utm_medium=member_desktop

Amazing!